![Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]](https://searchengineland.com/wp-content/seloads/2020/04/robots-gsc-fetch-block.jpg)

Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]

![Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]](https://searchengineland.com/wp-content/seloads/2020/04/robots-txt-google-docs.jpg)

![Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]](https://searchengineland.com/wp-content/seloads/2020/04/robots-communicate-large.jpg)

![Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]](https://searchengineland.com/wp-content/seloads/2020/04/robots-gsc-tester-www.jpg)

![Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]](https://searchengineland.com/wp-content/seloads/2020/04/robots-txt-redirect.jpg)

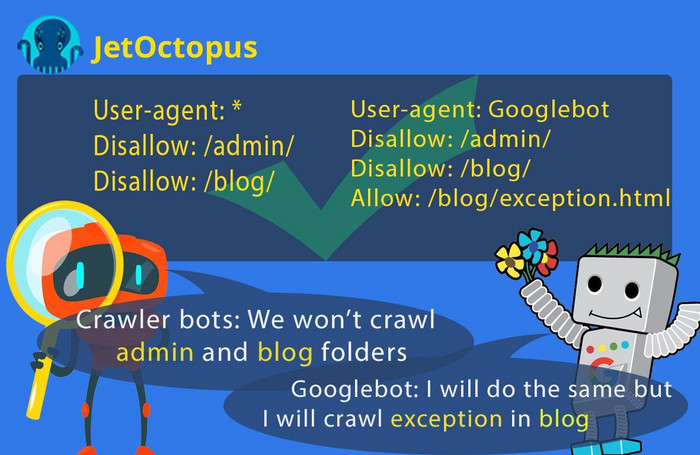

Ahrefs on Twitter: "7/ Use a separate robots.txt file for each subdomain Robots.txt only controls crawling behavior on the subdomain where it's hosted. If you want to control crawling on a different

![Mixed Directives: A reminder that robots.txt files are handled by subdomain and protocol, including www/non-www and http/https [Case Study]](https://searchengineland.com/wp-content/seloads/2020/04/robots-txt-www.jpg)
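
To make the per-subdomain, per-protocol point concrete, here is a minimal Python sketch that works out which robots.txt file applies to a given page URL. The helper name `robots_txt_url` and the `example.com` addresses are hypothetical and used only for illustration; the sketch assumes the file always lives at `/robots.txt` on the same scheme and host as the page, and it ignores redirects.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL on the same scheme and host as page_url.

    Hypothetical helper for illustration; it does not follow redirects.
    """
    parts = urlsplit(page_url)
    # Keep the scheme (http/https) and the host (www/non-www, subdomain, port)
    # unchanged; only the path is replaced and the query/fragment are dropped.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Four variants of what looks like "the same" page (example.com is a placeholder).
pages = [
    "http://example.com/category/page.html",
    "http://www.example.com/category/page.html",
    "https://example.com/category/page.html",
    "https://www.example.com/category/page.html",
]

for page in pages:
    print(page, "->", robots_txt_url(page))
```

Each of the four variants maps to a different robots.txt location, so unless the variants redirect to a single canonical version, their files can carry different directives.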